Telemetry data is the critical link between system performance and user experience, offering detailed, real-time insights that make smarter, faster decisions possible. It is valuable in any application where system performance and user experience are closely intertwined, such as streaming services and telecommunications. Telemetry involves automatically collecting data from locations that are remote, inaccessible, or impractical to monitor in person, and transmitting it for analysis. By continuously monitoring and analyzing system operations and user interactions, telemetry data helps organizations optimize operations and prevent issues before they impact users.

The concept dates back to the development of telegraphy and the ability to transmit data over wires. In the early 20th century, meteorological balloons were equipped with instruments to measure atmospheric pressure, temperature, and wind speed. Those measurements were then transmitted to Earth. The meteorological balloons demonstrated the core function of telemetry: gathering data from difficult-to-access locations and transmitting it for analysis. Telemetry became more widely known because of its use in space exploration, and it has continued to evolve into today’s sophisticated systems with wide-ranging applications.

By automatically capturing and transmitting data from truly diverse environments—ranging from deep-sea sensors to satellite systems—telemetry provides organizations with crucial perspectives. Data is typically gathered using sensors or other monitoring devices. Commonly collected telemetry data includes environmental conditions, operational metrics, performance indicators, and user interactions. Once collected and transmitted, telemetry data enables organizations to monitor, analyze, and optimize the performance and behavior of their systems, assets, and processes.

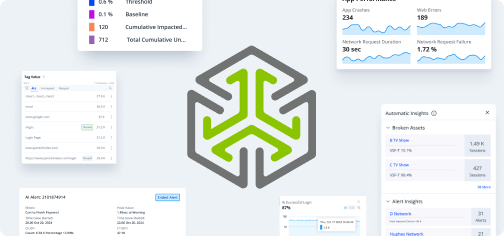

Conviva combines full-census client-side telemetry data with systems data into one session, giving companies real-time knowledge of any issues potentially affecting customers.

Telemetry data is categorized into four main types: metrics, events, logs, and traces (referred to as MELT). Traditional observability tools ingest this MELT telemetry data, which can pinpoint the likely cause of an issue. But troubleshooting teams still need to quickly understand the context of an issue in order to resolve it and deliver the best user experience.

Types of Telemetry Data

By offering an ongoing, dynamic view of system and user interactions, telemetry data serves a unique purpose in system monitoring and analysis. Understanding the nuances of each type of telemetry data enables organizations to use targeted monitoring strategies that maximize reliability, precision, and timely responses. Harnessing specific data types helps companies improve their decision-making processes. Each of the four MELT types serves a distinct purpose in system monitoring and analysis:

Metrics

These are quantitative measurements that offer valuable insights into system performance and behavior. Metrics are essential for gauging the health and efficiency of systems.

Metrics cover a diverse range of parameters. Three of the most common are rate, errors, and duration. Together these are known as RED metrics, and they are crucial for gaining insight into the health of systems and applications.

- Rate refers to the total number of requests received within a specific timeframe, providing a way to measure service demand.

- Errors refers to the frequency of errors produced by a piece of software, typically counted as the number of failed requests in the same timeframe.

- Duration quantifies the time it takes for a piece of software to respond to a request, reflecting its efficiency and responsiveness.
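
The three RED metrics above can be computed from a list of request records. The following is a minimal sketch, assuming a hypothetical `Request` record with a timestamp, a duration, and a success flag. None of these names come from a specific monitoring product.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """Hypothetical record of one handled request."""
    timestamp: float    # seconds since the start of the window
    duration_ms: float  # time taken to respond
    ok: bool            # False if the request failed


def red_metrics(requests: list[Request], window_seconds: float) -> dict:
    """Compute rate, errors, and duration for one time window."""
    total = len(requests)
    failed = sum(1 for r in requests if not r.ok)
    mean_duration = sum(r.duration_ms for r in requests) / total if total else 0.0
    return {
        "rate_per_second": total / window_seconds,            # Rate
        "error_count": failed,                                # Errors
        "error_ratio": failed / total if total else 0.0,
        "mean_duration_ms": mean_duration,                    # Duration
    }


sample = [
    Request(0.5, 120.0, True),
    Request(1.2, 80.0, True),
    Request(2.9, 400.0, False),
    Request(3.1, 100.0, True),
]
metrics = red_metrics(sample, window_seconds=4.0)
print(metrics)
```

In practice, duration is often reported as percentiles rather than a mean, because a mean can hide a small number of very slow requests.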

Examples of telemetry metrics include speed, latency, CPU usage, memory utilization, network throughput, and user engagement. Detecting anomalies or changes in any of these helps organizations assess their operations and make informed decisions about how to improve system performance. By tracking and analyzing telemetry metrics, organizations can identify bottlenecks and troubleshoot issues when they occur, driving continuous improvement.

Events

Events record the details of specific occurrences or activities within a system. Events are triggered by actions or conditions such as user interactions, system errors, or other significant occurrences. For example, clicking a button in an application or encountering a service error are ways to generate an event. Events provide insights into what happens at precise moments. They are crucial for incident response and troubleshooting.

Unlike metrics, events do not accumulate data over time. Instead, they capture precise environmental details at the moment of an occurrence. Event data can include stack traces, user credential submissions, authentication attempts, timestamps, user activity, errors, and exceptions. Analyzing events at this level of detail helps uncover the root causes of issues. For example, events can trace the sequence of actions leading up to a database error, facilitating effective troubleshooting and problem resolution within the system.
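
The idea of tracing the sequence of actions that led up to an error can be sketched as a simple lookup over timestamped events. The `Event` structure, event names, and `events_before` helper below are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    """Hypothetical event record: one occurrence at one moment in time."""
    timestamp: float
    name: str
    attributes: dict = field(default_factory=dict)


def events_before(events: list[Event], error_name: str, lookback_seconds: float) -> list[Event]:
    """Return the events inside the lookback window before the first matching error."""
    ordered = sorted(events, key=lambda e: e.timestamp)
    error = next((e for e in ordered if e.name == error_name), None)
    if error is None:
        return []
    window_start = error.timestamp - lookback_seconds
    return [e for e in ordered if window_start <= e.timestamp < error.timestamp]


events = [
    Event(10.0, "login", {"user": "u-1"}),
    Event(95.0, "click_checkout", {"user": "u-1"}),
    Event(98.0, "submit_payment", {"user": "u-1"}),
    Event(100.0, "db_error", {"code": "timeout"}),
]
leading_up = [e.name for e in events_before(events, "db_error", lookback_seconds=10.0)]
print(leading_up)
```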

Logs

Logs are text records that offer a timestamped history of events. They are indispensable for debugging, security audits, and compliance tracking, providing detailed insights into a system’s behavior, status, and performance at discrete points in time. As the oldest form of telemetry data, logs can help with troubleshooting and gaining a deeper understanding of system activities.

By capturing detailed information about system events, errors, warnings, and more, logs enable operations teams to diagnose issues, trace system behavior, and identify potential areas for improvement. They play a pivotal role in documenting the operation of electronic devices, providing a comprehensive record of their activities and interactions for analysis, monitoring, and maintenance.
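
As an illustration of timestamped log records, here is a minimal sketch that uses Python's standard `logging` module to emit one JSON object per log entry. The logger name `playback`, the field names, and the messages are assumptions made for this example. Writing to an in-memory stream keeps the sketch self-contained.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Format each log record as a single JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


stream = io.StringIO()                    # stands in for a log file or shipper
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("playback")    # hypothetical component name
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False                  # keep output out of the root logger

logger.info("stream started")
logger.warning("rebuffering for %d ms", 1200)

entries = [json.loads(line) for line in stream.getvalue().splitlines()]
for entry in entries:
    print(entry["time"], entry["level"], entry["message"])
```

Structured entries like these are easier to search and aggregate than free-form text, which matters once logs from many systems are collected in one place.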

Traces

Traces are chronological records of the execution paths taken through software code. They provide a detailed representation of activities occurring within applications. A trace is made up of spans, each of which represents a single operation along the path. Each span is timestamped and carries additional attributes that describe the corresponding operation. By recording the contextual details of each span, traces facilitate a granular understanding of application behavior and performance.

They transcend network boundaries, enabling comprehensive monitoring and analysis of interactions across distributed computing environments. In systems with distributed architectures and microservices, tracing capabilities are indispensable for gaining insights into the flow of operations, identifying performance bottlenecks, and troubleshooting issues that span multiple components or services. Traces enhance observability and ensure the reliability and efficiency of complex, interconnected systems.
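
To make the relationship between traces and spans concrete, the sketch below models a trace as a list of spans linked by parent IDs and finds the span where the most time is spent. The `Span` fields and service names are hypothetical. This is not the API of any particular tracing library, and it assumes that sibling spans do not overlap in time.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    """Hypothetical span: one timed operation within a trace."""
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    name: str
    start_ms: float
    end_ms: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def self_time_ms(span: Span, spans: list[Span]) -> float:
    """Time spent in the span itself, excluding its direct children.

    Assumes the children of a span run one after another (no overlap).
    """
    children = [s for s in spans if s.parent_id == span.span_id]
    return span.duration_ms - sum(c.duration_ms for c in children)


trace = [
    Span("t1", "a", None, "GET /play", 0, 300),
    Span("t1", "b", "a", "auth-service", 10, 60),
    Span("t1", "c", "a", "manifest-service", 70, 280),
    Span("t1", "d", "c", "db query", 80, 260),
]
bottleneck = max(trace, key=lambda s: self_time_ms(s, trace))
print(bottleneck.name, self_time_ms(bottleneck, trace))
```

Because every span carries the same trace ID, the spans can be emitted by different services and still be reassembled into one request path.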

Benefits of Telemetry Data

Telemetry data can significantly enhance an organization’s operations and decision-making capabilities. It provides accurate and reliable data collection that leads to real-time insights into system performance and user behavior. Real-time insights can lead to real-time solutions that keep issues from impacting customers—but a fast resolution doesn’t matter when an issue is impacting few or no users. By correlating user experience insights with performance insights, teams can prioritize effectively to focus on the most important issues, and not spend time and money fixing the wrong problems. Telemetry data helps with this by providing insights into the impact of an issue.

Telemetry data also ensures that all actions and transactions within a system are logged, providing an audit trail that can be valuable in the event of a security incident or compliance review. This capability is essential for meeting regulatory requirements such as GDPR, HIPAA, or PCI DSS, which mandate strict data security and privacy protections. By maintaining comprehensive logs of system activities, organizations can demonstrate compliance with these regulations and protect themselves against legal and financial repercussions.

With the advent of the Internet of Things (IoT), the concept of telemetry has expanded to encompass the collection and analysis of data from a growing number of connected devices. As technology continues to evolve, telemetry will have even more applications.

Key benefits that telemetry data offers include:

Performance Optimization

By delivering real-time data, telemetry enables precise monitoring and quick adjustments to improve system performance and user engagement. Through continuous collection and analysis of real-time data, developers gain actionable insights into an application’s behavior, strengths, and weaknesses—and even its performance across diverse geographic locations and user devices. By tracking and trending data this way, teams can discern patterns, detect anomalies, and pinpoint areas for optimization or improvement. With a holistic perspective on system performance and user engagement, organizations can make informed decisions, prioritize enhancements, and deliver superior user experiences.

Resource Allocation and Cost Savings

With telemetry data, organizations gain granular visibility into resource utilization, demand patterns, and workload trends across their infrastructure and systems. Automated data collection helps with efficient resource utilization, leading to significant cost savings and better allocation based on demand and performance insights. Ensuring that resources are allocated effectively can also help with meeting future demand. By identifying opportunities for optimization and fine-tuning resource allocation policies, organizations can maximize their use of available resources while minimizing costs.

Traditional manual data collection methods are labor intensive and time consuming, but telemetry technology automates the process. This frees up many labor hours and significantly reduces the time required for data gathering and analysis. Automation streamlines operations and allows organizations to reallocate their human resources to more strategic tasks.

Increased Accuracy

Automated systems can process vast amounts of information quickly and consistently, reducing the likelihood of mistakes that can occur with manual data entry or analysis. Automation reduces human errors, providing more accurate and more reliable data to use as the basis for decision-making. Manual methods can also miss critical events or changes entirely. The continuous and automated approach of using telemetry data minimizes errors, ensuring that organizations receive comprehensive and precise data for analysis. With this accurate data, organizations can better assess risks, evaluate performance metrics, and adopt strategies to achieve their business objectives.

Improved Security

Continuous monitoring helps detect security threats early, picking up on irregular activities and anomalies and alerting organizations to potential threats or breaches. Upon detection of any threats, organizations can quickly implement countermeasures, mitigating the risk of successful breaches and minimizing the potential impact on operations. Telemetry data provides detailed context about the nature and scope of the threat, aiding in targeted responses. Those responses can include isolating affected systems, blocking suspicious IP addresses, or implementing additional authentication measures to limit access. Rapid response reduces the window of opportunity for attackers.

Comprehensive logs and traces from telemetry data provide valuable insights into past incidents—and learning from those prior incidents can shape and improve future security measures and strategies. A proactive approach safeguards the integrity of organizational systems and protects sensitive data from unauthorized access. By leveraging telemetry data for security monitoring and threat detection, organizations can enhance their resilience against cyber threats and instill confidence in their ability to safeguard critical assets.

How Telemetry Data Works

Telemetry data is distinct from other types of data in several ways, primarily because of its methods of collection, scope, and application. Its distinctive features—automatic and remote collection methods, real-time analysis, granular detail, operational focus, technological integration, and its crucial role in monitoring and control—set it apart from other types of data.

Method of Collection

Telemetry data is typically gathered automatically and remotely. Unlike traditional data requiring manual entry or direct collection, telemetry uses sensors and devices that transmit data back to a central system without human intervention. This is particularly useful for monitoring environments or systems that are not easily accessible, or where continuous data collection is necessary.

Sensors can include GPS modules, accelerometers, and gyroscopes, which are commonly used in IoT devices to detect movement. In the context of streaming services and IT environments, sensors might also include network monitoring tools that track bandwidth usage, latency, and packet loss.

Software agents are programs installed on devices or within applications to collect telemetry data. These agents monitor specific parameters, such as system performance, user interactions, or application behavior, and continuously gather data. The data collected by software agents or sensors can include metrics, events, logs, traces, and other telemetry data types generated by applications, servers, networks, and devices.

Instrumentation

To collect telemetry data effectively, organizations often deploy observability tools or agents within their systems and applications. These tools capture and transmit telemetry data to centralized monitoring systems or data repositories for further analysis. Sensors and software agents embedded in devices, applications, or systems continuously gather data on various parameters, such as performance metrics, usage patterns, or environmental conditions. This data is then transmitted through secure communication channels, typically using protocols such as HTTP, MQTT, or TCP/IP.
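
As a rough illustration of the transmission step over HTTP, the sketch below packages a batch of readings as JSON and builds a POST request with Python's standard library. The endpoint URL, the payload layout, and the `build_ingest_request` helper are all hypothetical. The request is built but not sent, so the example runs offline.

```python
import json
import urllib.request

# Hypothetical ingestion endpoint; a real deployment would use its own URL.
INGEST_URL = "https://telemetry.example.com/v1/ingest"


def build_ingest_request(device_id: str, readings: list[dict]) -> urllib.request.Request:
    """Package readings as a JSON body in an HTTPS POST request."""
    payload = json.dumps({"device_id": device_id, "readings": readings}).encode("utf-8")
    return urllib.request.Request(
        INGEST_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_ingest_request(
    "player-123",
    [{"metric": "bitrate_kbps", "value": 4500}],
)
print(request.get_method(), request.full_url)

# Sending is omitted on purpose. A real agent would call
# urllib.request.urlopen(request, timeout=5) and handle network errors and retries.
```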

Data Ingestion

Once collected, telemetry data is ingested into centralized data repositories or monitoring platforms for processing and analysis. This may involve aggregating data from multiple sources, normalizing data formats, and storing data for efficient retrieval and analysis. Given its volume, velocity, and variety, telemetry data requires storage solutions that can handle large amounts of data efficiently while supporting fast access and retrieval.
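
Normalizing data formats during ingestion might look like the following sketch, which maps two differently shaped sources onto one common record layout. Both input formats and all field names are invented for the example.

```python
def normalize_player(record: dict) -> dict:
    """Hypothetical client-side format: millisecond timestamps, camelCase keys."""
    return {
        "source": "player",
        "timestamp": record["tsMillis"] / 1000.0,
        "metric": record["metricName"],
        "value": float(record["value"]),
    }


def normalize_server(record: dict) -> dict:
    """Hypothetical server-side format: second timestamps, short keys."""
    return {
        "source": "server",
        "timestamp": float(record["ts"]),
        "metric": record["name"],
        "value": float(record["val"]),
    }


def ingest(player_records: list[dict], server_records: list[dict]) -> list[dict]:
    """Merge both sources into one time-ordered list with a shared schema."""
    normalized = [normalize_player(r) for r in player_records]
    normalized += [normalize_server(r) for r in server_records]
    return sorted(normalized, key=lambda r: r["timestamp"])


unified = ingest(
    [{"tsMillis": 2000, "metricName": "startup_time_ms", "value": "850"}],
    [{"ts": 1.5, "name": "cpu_percent", "val": 42}],
)
for row in unified:
    print(row)
```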

Data Processing

Telemetry data undergoes processing steps to extract meaningful insights and identify patterns or anomalies. These can include data cleansing, transformation, enrichment, and aggregation to prepare the data for analysis. Storing telemetry data effectively means ensuring that data is accessible, secure, and usable for making informed decisions.
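
The cleansing, enrichment, and aggregation steps can be sketched as a small pipeline. The device-to-region lookup table and the `latency_ms` field below are assumptions for illustration only.

```python
from collections import defaultdict

# Hypothetical lookup table used to enrich readings with a region.
REGION_BY_DEVICE = {"tv-1": "us-east", "phone-7": "eu-west"}


def process(readings: list[dict]) -> dict:
    """Cleanse, enrich, and aggregate latency readings into a mean per region."""
    # Cleansing: drop readings with missing or negative latency.
    clean = [
        r for r in readings
        if r.get("latency_ms") is not None and r["latency_ms"] >= 0
    ]
    # Enrichment: attach a region to each reading.
    enriched = [
        {**r, "region": REGION_BY_DEVICE.get(r["device"], "unknown")}
        for r in clean
    ]
    # Aggregation: mean latency per region.
    by_region = defaultdict(list)
    for r in enriched:
        by_region[r["region"]].append(r["latency_ms"])
    return {region: sum(values) / len(values) for region, values in by_region.items()}


readings = [
    {"device": "tv-1", "latency_ms": 100},
    {"device": "tv-1", "latency_ms": 200},
    {"device": "phone-7", "latency_ms": 50},
    {"device": "phone-7", "latency_ms": None},   # dropped: missing value
    {"device": "tv-1", "latency_ms": -5},        # dropped: invalid value
    {"device": "tablet-9", "latency_ms": 80},    # no region known
]
summary = process(readings)
print(summary)
```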

Analysis and Visualization

After processing, advanced analytics techniques can uncover trends, correlations, and anomalies. Visualization tools and dashboards can be used to present telemetry data in a clear and intuitive format, enabling operations teams to gain useful insights at a glance.

Alerting and Notification

Telemetry data can trigger alerts or notifications when predefined thresholds or conditions are met. These thresholds and alarms are typically set based on normal system behavior, so a crossed threshold often indicates a potential security or performance issue. The resulting alerts notify teams of anomalies or performance degradation, allowing them to take timely action to address the situation.
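
One simple way to derive a threshold from typical behavior is to take the mean of a baseline period plus a multiple of its standard deviation. The sketch below assumes that approach with a multiplier of 3. Both the approach and the sample values are illustrative choices, not a description of how any specific product computes its alerts.

```python
import statistics


def build_threshold(baseline: list[float], k: float = 3.0) -> float:
    """Threshold = baseline mean + k population standard deviations."""
    return statistics.mean(baseline) + k * statistics.pstdev(baseline)


def find_alerts(values: list[float], threshold: float) -> list[tuple[int, float]]:
    """Return (index, value) pairs for every value above the threshold."""
    return [(i, v) for i, v in enumerate(values) if v > threshold]


baseline_latency_ms = [100, 102, 98, 101, 99]   # typical behavior
threshold = build_threshold(baseline_latency_ms)

live_latency_ms = [101, 99, 130, 103]
alerts = find_alerts(live_latency_ms, threshold)
print(round(threshold, 2), alerts)
```

A static threshold like this works best for stable signals. Metrics with strong daily or weekly cycles usually need a baseline that accounts for the time of day.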

Telemetry Data and Content Delivery

Telemetry data is designed to be collected, transmitted, processed, and analyzed almost instantaneously. This enables dynamic responses within systems being monitored, such as adjusting operational parameters in response to live data feeds. Other data types might be subject to batch processing or periodic reviews, which can result in delayed responses and missed opportunities for timely interventions.

With real-time data, organizations can gain immediate insights into potential issues that users are experiencing (such as buffering, failed playback, failed purchases, etc.), allowing them to respond swiftly and effectively to mitigate risks and prevent disruptions.

Scale and Granularity

Telemetry data can be captured at a very granular level and on a broad scale. For example, telemetry can record the number of concurrent viewers, stream quality metrics such as resolution and buffering, user engagement metrics such as clicks and interactions with an interface, and even the geographic locations of viewers. This level of granularity allows streaming platforms to analyze viewer trends, optimize content delivery, and enhance the overall viewing experience in real time.
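
As an example of deriving one of these figures, peak concurrent viewers can be computed from per-session start and end times. This is a minimal sketch that assumes sessions are available as `(start, end)` pairs in seconds. The function name and data are hypothetical.

```python
def peak_concurrent_viewers(sessions: list[tuple[float, float]]) -> int:
    """Return the maximum number of sessions active at the same time."""
    changes = []
    for start, end in sessions:
        changes.append((start, 1))    # a viewer joins
        changes.append((end, -1))     # a viewer leaves
    # At the same instant, process departures (-1) before arrivals (+1),
    # so back-to-back sessions are not counted as overlapping.
    changes.sort(key=lambda c: (c[0], c[1]))

    current = peak = 0
    for _, delta in changes:
        current += delta
        peak = max(peak, current)
    return peak


sessions = [(0, 10), (5, 15), (10, 20), (12, 14)]
peak = peak_concurrent_viewers(sessions)
print(peak)
```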

Improving the User Experience

In the streaming industry, one of the primary purposes of telemetry data is monitoring and enhancing viewer experiences. Streaming platforms use telemetry to track metrics such as stream start times, buffering events, video quality, and user engagement patterns. This data allows platforms to diagnose and address issues before they negatively impact users. Telemetry also helps improve the user experience by providing a deeper understanding of viewer preferences and behavior, enabling content recommendation algorithms to refine their suggestions. And it helps manage network load during peak viewing times to ensure smooth content delivery without interruptions.

Streaming platforms collect a wide array of telemetry data on viewer interactions, such as watch times, pause and resume patterns, selections after recommendations, and even abandonment rates on specific shows or movies. This allows platforms to go beyond merely tracking which genres or titles a user watches to understand nuances in their preferences. Platforms can use this data to tailor content recommendations more effectively to individual users. A deeper level of personalization enhances user engagement and satisfaction, encouraging longer viewing sessions and increased platform loyalty.
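
An abandonment rate per title could be computed along the following lines. The definition used here (a view counts as abandoned when less than 25% of the title was watched) is an assumption for the example, as are the field names. Platforms define abandonment in different ways.

```python
from collections import defaultdict

# Hypothetical definition: watching under 25% of a title counts as abandoning it.
ABANDON_THRESHOLD = 0.25


def abandonment_rates(views: list[dict]) -> dict:
    """Return the share of abandoned views for each title."""
    totals = defaultdict(int)
    abandoned = defaultdict(int)
    for view in views:
        totals[view["title"]] += 1
        watched_fraction = view["watched_seconds"] / view["length_seconds"]
        if watched_fraction < ABANDON_THRESHOLD:
            abandoned[view["title"]] += 1
    return {title: abandoned[title] / totals[title] for title in totals}


views = [
    {"title": "Show A", "watched_seconds": 100, "length_seconds": 3600},
    {"title": "Show A", "watched_seconds": 3000, "length_seconds": 3600},
    {"title": "Show A", "watched_seconds": 200, "length_seconds": 3600},
    {"title": "Show A", "watched_seconds": 3600, "length_seconds": 3600},
    {"title": "Movie B", "watched_seconds": 6000, "length_seconds": 7200},
    {"title": "Movie B", "watched_seconds": 7000, "length_seconds": 7200},
]
rates = abandonment_rates(views)
print(rates)
```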

Resource Utilization

Telemetry data is vital for managing network resources, especially during peak viewing times. Start-up times, rebuffering rates, and overall bandwidth usage all help streaming services to assess the load on their servers. By analyzing this telemetry data in real time, platforms can adjust their network strategies to handle spikes in demand. If the data indicates an upcoming peak, the platform can dynamically increase server capacity or optimize data routing to minimize latency and buffering. This proactive approach ensures a seamless viewing experience for users, reducing frustration and potential service interruptions. Telemetry data also allows for the geographical distribution of content delivery networks (CDNs) to be optimized based on where demand is highest, further enhancing network resource allocation.
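
The capacity adjustment described above can be reduced to a small calculation: given a forecast of peak viewers, how many servers are needed with some safety margin? The per-server capacity, the 20% headroom, and the forecast figure below are all hypothetical values chosen for illustration.

```python
def servers_needed(expected_viewers: int, viewers_per_server: int,
                   headroom_percent: int = 20) -> int:
    """Servers required to serve the expected viewers plus a safety margin.

    Uses integer arithmetic so the result is an exact ceiling.
    """
    padded_demand = expected_viewers * (100 + headroom_percent)
    capacity = viewers_per_server * 100
    return -(-padded_demand // capacity)   # integer ceiling division


current_servers = 25
target_servers = servers_needed(expected_viewers=51_000, viewers_per_server=2_000)
servers_to_add = max(0, target_servers - current_servers)
print(target_servers, servers_to_add)
```

Real autoscaling policies typically also consider how quickly capacity can be added and when it is safe to scale back down.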

Platforms can integrate direct user feedback with telemetry data to better understand discrepancies between user-reported issues and what the telemetry data shows. This helps refine data collection and analysis methodologies, ensuring that the data captured is as useful and accurate as possible.

Telemetry data in the streaming industry is a critical tool that helps create a seamless and enjoyable viewing experience for users. It helps platforms deliver the content that users want to see smoothly and reliably, even under the strain of high traffic.

Optimizing Telemetry Data with Conviva’s Experience-Centric Observability

Traditional observability tools focus on system health. System health is important, but it’s only half of the equation. Conviva’s approach integrates user experience insights into telemetry data analysis. This ensures that data reflects not just system performance but also how users interact with and experience the platform. Conviva’s real-time monitoring and sophisticated AI alerts allow organizations to detect and resolve issues efficiently while also understanding user impacts, enhancing user satisfaction and operational decision-making. For example, if users experience issues with failed playback or failed purchases, real-time data makes fast resolutions possible. Conviva’s Operational Data Platform can ingest 300 GB per minute of telemetry data, and it can compute 12 billion stateful metrics per minute. Continuous monitoring paired with computing power allows companies to have actionable insights into what their users are experiencing in real time.

Conviva’s Experience-Centric Observability approach applies a user-centric focus, rather than a system-centric one, to delivering customers the best possible digital experience. Experience-Centric Observability helps businesses understand how their customers are interacting with their technology, and what those interactions mean for long-term engagement.

For example, telemetry data helps manage bandwidth efficiently. For streaming services, this means delivering the best possible video quality while conserving bandwidth when necessary, in order to reduce costs and improve scalability. And with telemetry data, operational teams have access to detailed, actionable insights about how content delivery and viewer experience are intertwined. This supports better decision-making related to content distribution strategies, network resource allocation, and even content licensing and creation. Telemetry data can show companies how to connect system performance to business outcomes.

Conviva generates full-census metrics, rather than just sampling, which means that companies are alerted even to rare edge cases when errors are detected. Sampling by definition never provides a full picture, and it can mean that critical issues go undetected. Full-census telemetry data helps identify issues before they affect a significant number of viewers. Scanning more than 2 billion metrics per minute, Conviva automatically notifies operations teams with detailed analysis when predefined thresholds or conditions are breached. A proactive approach to maintaining quality of service is key in an industry where user loyalty is heavily dependent on service quality. Conviva’s AI alerts enable organizations to take timely actions to maintain operational continuity, optimize performance, and deliver uninterrupted service to their users.
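
The difference between full-census and sampled data can be shown with a small constructed example. The sketch below is purely illustrative and does not represent Conviva's implementation. It creates 1,000 hypothetical sessions with two failures, then compares a full scan against a sample of every 100th session.

```python
# Hypothetical data set: 1,000 sessions, of which two failed.
FAILED_IDS = {137, 512}
sessions = [{"id": i, "failed": i in FAILED_IDS} for i in range(1000)]

# Full census: inspect every session.
census_failures = [s["id"] for s in sessions if s["failed"]]

# Sampling: inspect only every 100th session (ids 0, 100, 200, ...).
sampled = sessions[::100]
sampled_failures = [s["id"] for s in sampled if s["failed"]]

print("census found:", census_failures)
print("sample found:", sampled_failures)
```

In this constructed case the sample misses both failures. A different sample might catch one of them, but only the full scan is guaranteed to see every affected session.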

Enhance Telemetry Data Operations with Conviva

Telemetry data plays an important role in driving innovation and enhancing performance. By using telemetry data, organizations can respond more quickly and effectively to operational challenges while also strategizing for the future. Telemetry is integral not just to monitoring and analytics but also to operational excellence and viewer satisfaction. Streaming services can apply the advantages of telemetry data to offer the best possible user experience.

Conviva’s cutting-edge platform for in-depth, real-time telemetry data analysis and monitoring empowers these services to gain granular insights into viewer behavior, optimize content delivery, and ensure seamless performance across diverse devices and network conditions.